1. Introduction

A new dataset with 12 distinct tasks and evaluation metrics that compensate for bias, so that the strengths

and limitations of algorithms can be better measured

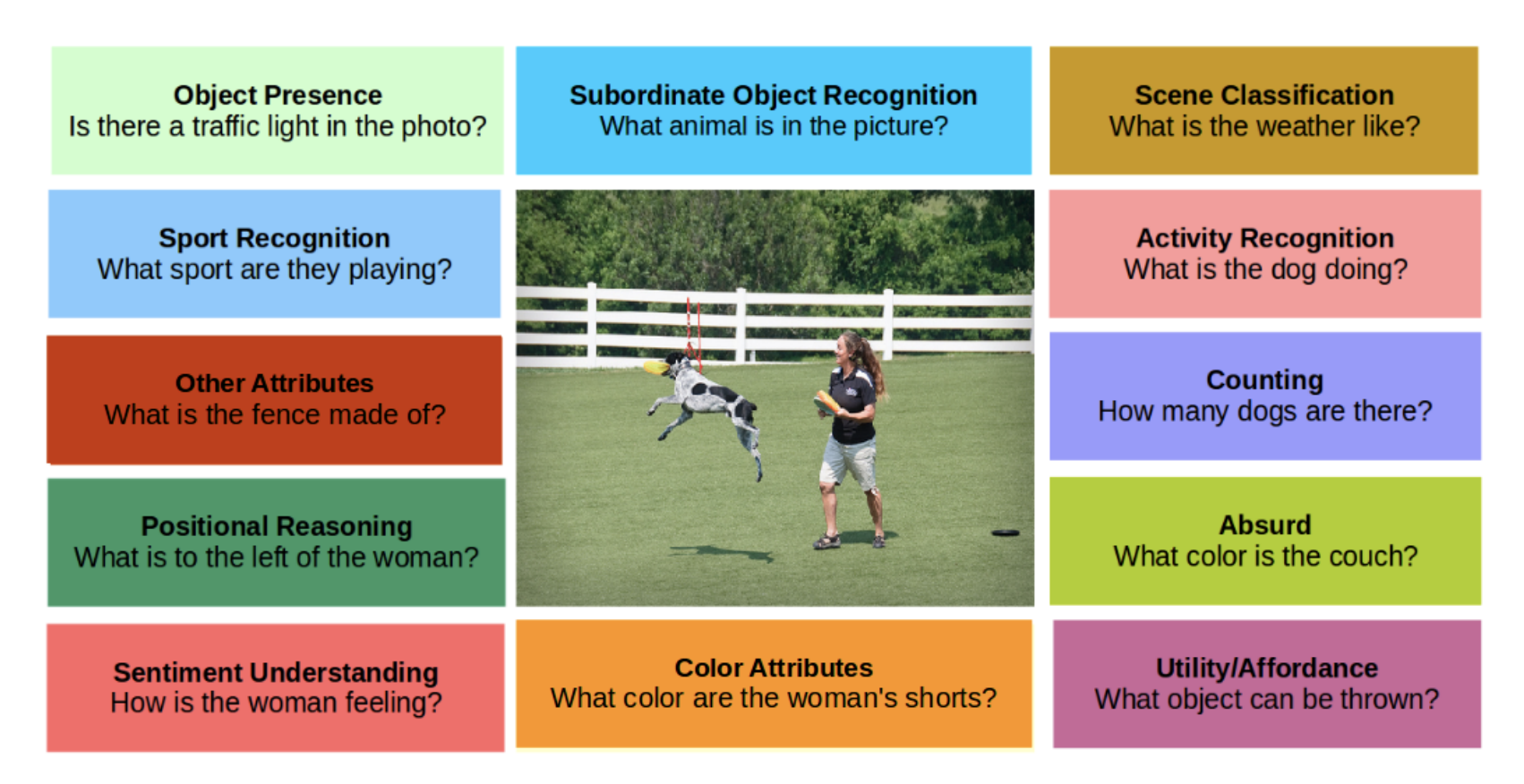

Contributions: 1) We create a new VQA benchmark dataset where questions are divided into 12 different categories based on the task they solve; 2) We propose two new evaluation metrics that compensate for forms of dataset bias; 3) We balance the number of yes/no object presence detection questions to assess whether a balanced distribution can help algorithms learn better; and 4) We introduce absurd questions that force an algorithm to determine if a question is valid for a given image.

2. Background

2.1. Prior Natural Image VQA Datasets

For COCO-VQA, a system trained using only question features achieves 50% accuracy [13]. This suggests that some questions have predictable answers.

In 2017, the VQA 2.0 [7] dataset was introduced. In VQA 2.0, the same question is asked for two different images and annotators are instructed to give opposite answers, which helped reduce language bias.

However, in addition to language bias, these datasets are also biased in their distribution of different types of questions and the distribution of answers within each question-type.

Existing VQA datasets use performance metrics that treat each test instance with equal value (e.g., simple accuracy).

For related reasons, major benchmarks released in the last decade do not use simple accuracy for evaluating image recognition and related computer vision tasks, but instead use metrics such as mean-per-class accuracy that compensates for unbalanced categories.

2.2. Synthetic Datasets that Fight Bias

Both SHAPES and CLEVR were specifically tailored for compositional language approaches [1] and downplay the importance of visual reasoning.

3. TDIUC for Nuanced VQA Analysis

The question-types are:

- Object Presence (e.g., ‘Is there a cat in the image?’)

- Subordinate Object Recognition (e.g., ‘What kind of furniture is in the picture?’)

- Counting (e.g., ’How many horses are there?’)

- Color Attributes (e.g., ‘What color is the man’s tie?’)

- Other Attributes (e.g., ‘What shape is the clock?’)

- Activity Recognition (e.g., ‘What is the girl doing?’)

- Sport Recognition (e.g.,‘What are they playing?’)

- Positional Reasoning (e.g., ‘What is to the left of the man on the sofa?’)

- Scene Classification (e.g., ‘What room is this?’)

- Sentiment Understanding (e.g.,‘How is she feeling?’)

- Object Utilities and Affordances (e.g.,‘What object can be used to break glass?’)

- Absurd (i.e., Nonsensical queries about the image)

The questions come from three sources. First, we imported a subset of questions from COCO-VQA and Visual Genome. Second, we created algorithms that generated questions from COCO’s semantic segmentation annotations [19], and Visual Genome’s objects and attributes annotations [18]. Third, we used human annotators for certain question-types. In the following sections, we briefly describe each of these methods.

3.1. Importing Questions from Existing Datasets

We imported questions from COCO-VQA and Visual Genome belonging to all question-types except ‘object utilities and affordances’. We did this by using a large number of templates and regular expressions. For Visual Genome, we imported questions that had one word answers. For COCO-VQA, we imported questions with one or two word answers and in which five or more annotators agreed.

3.2. Generating Questions using Image Annotations

3.2.1 Questions Using COCO annotations

For creating positional reasoning questions. We tried to generate above/below questions, but the results were unreliable.

3.2.2 Questions Using Visual Genome annotations

Visual Genome’s annotations contain region descriptions, relationship graphs, and object boundaries. However, the annotations can be both non-exhaustive and duplicated, which makes using them to automatically make QA pairs difficult. We only use Visual Genome to make color and positional reasoning questions. The methods we used are similar to those used with COCO, but additional precautions were needed due to quirks in their annotations.

3.4. Post Processing

Post processing was performed on questions from all sources. All numbers were converted to text, e.g., 2 became two. All answers were converted to lowercase, and trailing punctuation was stripped. Duplicate questions for the same image were removed. All questions had to have answers that appeared at least twice. The dataset was split into train and test splits with 70% for train and 30% for test.

4. Proposed Evaluation Metric

For this reason, some have argued that VQA is a kind of Visual Turing Test [21].

Our overall metrics are the arithmetic and harmonic means across all per question-type accuracies, referred to as arithmetic mean-per-type (Arithmetic MPT) accuracy and harmonic mean-per-type accuracy (Harmonic MPT). Unlike the Arithmetic MPT, Harmonic MPT measures the ability of a system to have high scores across all question-types and is skewed towards lowest performing categories.

We also use normalized metrics that compensate for bias in the form of imbalance in the distribution of answers within each question-type, e.g., the most repeated answer ‘two’ covers over 35% of all the counting-type questions. To do this, we compute the accuracy for each unique answer separately within a question-type and then average them together for the question-type. To compute overall performance, we compute the arithmetic normalized mean per-type (N-MPT) and harmonic N-MPT scores.

5. Algorithms for VQA

Almost all systems use CNN features to represent the image and either a recurrent neural network (RNN) or a bag-of-words model for the question.

The MCB system [5] won the CVPR-2016 VQA Workshop Challenge. In addition to using spatial attention, it implicitly computes the outer product between the image and question features to ensure that all of their elements interact. Explicitly computing the outer product would be slow and extremely high dimensional, so it is done using an efficient approximation. It uses an long short-term memory (LSTM) networks to embed the question.

The neural module network (NMN) is an especially interesting compositional approach to VQA [1, 2]. The main idea is to compose a series of discrete modules (sub-networks) that can be executed collectively to answer a given question. To achieve this, they use a variety of modules, e.g., the find(x) module outputs a heat map for detecting x. To arrange the modules, the question is first parsed into a concise expression (called an S-expression), e.g., ‘What is to the right of the car?’ is parsed into (what car);(what right);(what (and car right)). Using these expressions, modules are composed into a sequence to answer the query.

The multi-step recurrent answering units (RAU) model for VQA is another state-of-the-art method [23]. Each inference step in RAU consists of a complete answering block that takes in an image, a question, and the output from the previous LSTM step. Each of these is part of a larger LSTM network that progressively reasons about the question.

For image features, ResNet-152 [8] with 448×448 images was used for all models.

By inspecting Table 3, we can see that some question types are comparatively easy (> 90%) under MPT: scene recognition, sport recognition, and object presence.

High accuracy is also achieved on absurd, which we discuss in greater detail in Sec. 7.4. Subordinate object recognition is moderately high (> 80%), despite having a large number of unique answers. Accuracy on counting is low across all methods, despite a large number of training data. For the remaining question-types, more analysis is needed to pinpoint whether the weaker performance is due to lower amounts of training data, bias, or limitations of the models. We next investigate how much of the good performance is due to bias in the answer distribution, which N-MPT compensates for.

Overall performance is reported using 5 metrics. Overall (Arithmetic MPT) and Overall (Harmonic MPT) are averages of these sub-scores, providing a clearer picture of performance across question-types than simple accuracy. Overall Arithmetic N-MPT and Harmonic NMPT normalize across unique answers to better analyze the impact of answer imbalance (see Sec. 4).